Argument Retrieval for Controversial Questions 2021

Synopsis

- Task: Given a question on a controversial topic, retrieve relevant arguments from a focused crawl of online debate portals.

- Input: [corpus] [other]

- Submission: [submit]

Task

The goal of Task 1 is to support users who search for arguments to be used in conversations (e.g., getting an overview of pros and cons or just looking for arguments in line with a user's stance). Given a question on a controversial topic, the task is to retrieve relevant arguments from a focused crawl of online debate portals.

Data

Example topic for Task 1:

<topic>

<number>1</number>

<title>Is human activity primarily responsible for global climate change?</title>

</topic>

The corpus for Task 1 is the args.me corpus; you may index the corpus with your favorite retrieval system. To ease participation, you may also directly use the args.me search engine's API for a baseline retrieval.

Other data for this task are the topics and quality judgements. [download]
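The topic format shown above can be read with Python's standard library. The following is a minimal sketch, not an official loader: it assumes that the topics file wraps the individual `<topic>` elements in a single root element (called `<topics>` here, which is an assumption), and the function name `parse_topics` is made up for illustration.

```python
import xml.etree.ElementTree as ET


def parse_topics(xml_text):
    """Return a list of (number, title) pairs from a topics XML string."""
    root = ET.fromstring(xml_text)
    topics = []
    # iter() also yields the root itself, so a lone <topic> element works too.
    for topic in root.iter("topic"):
        number = int(topic.findtext("number").strip())
        title = topic.findtext("title").strip()
        topics.append((number, title))
    return topics


# Assumed wrapper element <topics>; the inner topic is the example from this page.
SAMPLE = """<topics>
<topic>
<number>1</number>
<title>Is human activity primarily responsible for global climate change?</title>
</topic>
</topics>"""

for number, title in parse_topics(SAMPLE):
    print(number, title)
```

In an automatic run, the title would be passed to the retrieval system unchanged.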

Evaluation

Be sure to retrieve "strong" arguments. Our human assessors will label the retrieved documents manually, both for their general topical relevance and for their rhetorical quality, i.e., the "well-writtenness" of the document: (1) whether the text has a good style of speech (formal language is preferred over informal), (2) whether the text has a proper sentence structure and is easy to read, (3) whether it includes profanity, has typos, or makes use of other detrimental style choices.

The format of the relevance/quality judgment file:

qid 0 doc rel

With:

- qid: The topic number.

- 0: Unused, always 0.

- doc: The document ID ("trec_id" if you use ChatNoir or the official ClueWeb12 ID).

- rel: The relevance judgment: 0 (not relevant) to 3 (highly relevant). The quality judgment: 0 (low, or no arguments) to 3 (high).

You can use the corresponding evaluation script to evaluate your run using the relevance judgments.
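To get a feeling for how the judgments feed into nDCG@5 (the measure reported in the results), the sketch below parses judgment lines in the four-column format and scores one ranking. It is not the official evaluation script: it assumes the common formulation with the judgment value as gain and a log2(rank + 1) discount, and the document IDs and function names are made up for illustration.

```python
import math
from collections import defaultdict


def parse_qrels(lines):
    """Map each topic number to a {doc_id: judgment} dictionary."""
    qrels = defaultdict(dict)
    for line in lines:
        parts = line.split()
        if len(parts) != 4:
            continue  # skip blank or malformed lines
        qid, _unused, doc, rel = parts
        qrels[qid][doc] = int(rel)
    return qrels


def dcg(gains):
    """Discounted cumulative gain with a log2(rank + 1) discount (assumed)."""
    return sum(g / math.log2(rank + 1) for rank, g in enumerate(gains, start=1))


def ndcg_at_k(ranked_docs, judgments, k=5):
    """nDCG@k for one topic; unjudged documents count as gain 0."""
    gains = [judgments.get(doc, 0) for doc in ranked_docs[:k]]
    ideal = sorted(judgments.values(), reverse=True)[:k]
    ideal_dcg = dcg(ideal)
    return dcg(gains) / ideal_dcg if ideal_dcg > 0 else 0.0


# Hypothetical judgments for topic 1.
qrels = parse_qrels(["1 0 docA 3", "1 0 docB 0", "1 0 docC 2"])
print(ndcg_at_k(["docA", "docC", "docB"], qrels["1"]))  # ideal order
print(ndcg_at_k(["docB", "docC", "docA"], qrels["1"]))  # worse order
```

Scores computed this way may differ slightly from the official script if it uses a different gain or discount function.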

Submission

We encourage participants to use TIRA for their submissions to allow for better reproducibility. Please also have a look at our TIRA quickstart; in case of problems we will be able to assist you. Even though TIRA is the preferred way of submitting runs, in case of problems you may also submit runs via email. We will try to quickly review your TIRA or email submissions and provide feedback.

Runs may be either automatic or manual. An automatic run must not "manipulate" the topic titles via manual intervention. A manual run is anything that is not an automatic run. Upon submission, please let us know which of your runs are manual. For each topic, include up to 1,000 retrieved documents. Each team can submit up to 5 different runs.

The submission format for the task will follow the standard TREC format:

qid Q0 doc rank score tag

With:

- qid: The topic number.

- Q0: Unused, should always be Q0.

- doc: The document ID (the official args.me ID) returned by your system for the topic qid.

- rank: The rank the document is retrieved at.

- score: The score (integer or floating point) that generated the ranking. The score must be in descending (non-increasing) order. It is important to handle tied scores.

- tag: A tag that identifies your group and the method you used to produce the run.
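A minimal sketch of producing lines in this format from scored documents is given below. The function name `format_run` is hypothetical, and breaking ties by document ID is only one possible way to handle tied scores deterministically, not a rule of the task.

```python
def format_run(qid, scored_docs, tag, max_docs=1000):
    """Return TREC run lines for one topic from (doc_id, score) pairs."""
    # Sort by descending score; ties are broken by document ID (an assumption)
    # so that the same input always yields the same ranking.
    ordered = sorted(scored_docs, key=lambda pair: (-pair[1], pair[0]))
    lines = []
    # Keep at most max_docs documents per topic, with ranks starting at 1.
    for rank, (doc_id, score) in enumerate(ordered[:max_docs], start=1):
        lines.append(f"{qid} Q0 {doc_id} {rank} {score} {tag}")
    return lines


scored = [
    ("Sf9294c83-A9a4e056e", 16.43),
    ("Sf9294c83-Af186e851", 17.89),
    ("S96f2396e-Aaf079b43", 16.42),
]
for line in format_run(1, scored, "myGroupMyMethod"):
    print(line)
```

Sorting before assigning ranks keeps the scores non-increasing down the file, as the format requires.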

An example run for Task 1 is:

1 Q0 Sf9294c83-Af186e851 1 17.89 myGroupMyMethod

1 Q0 Sf9294c83-A9a4e056e 2 16.43 myGroupMyMethod

1 Q0 S96f2396e-Aaf079b43 3 16.42 myGroupMyMethod

...

TIRA Quickstart

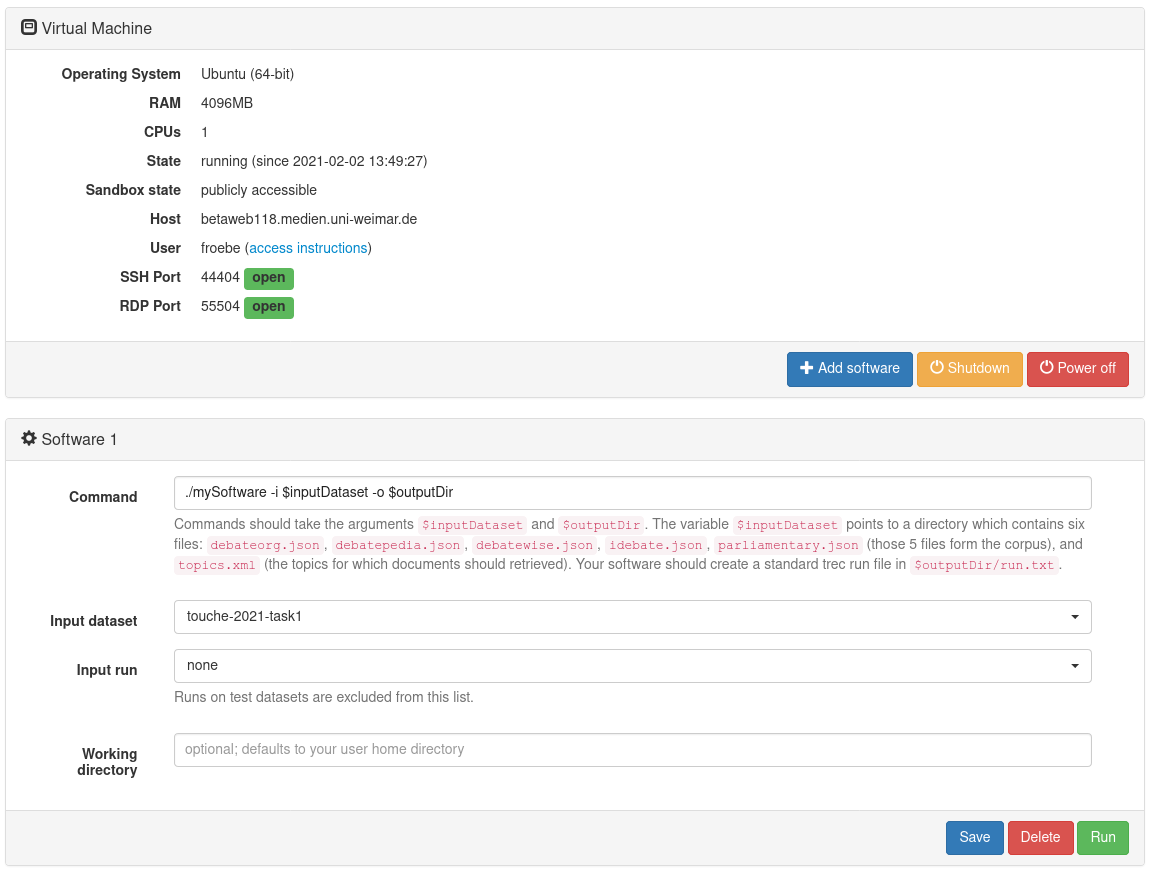

Participants have to upload (through SSH or RDP) their retrieval models to a dedicated TIRA virtual machine, so that their runs can be reproduced and so that they can be easily applied to different data (of the same format) in the future. You can find the host ports for your VM in the web interface, which uses the same login as your VM. If you cannot connect to your VM, please make sure it is powered on: you can check and power on your machine in the web interface.

Your software is expected to accept two arguments:

- An input directory (named $inputDataset in TIRA). The variable $inputDataset points to a directory which contains six files: debateorg.json, debatepedia.json, debatewise.json, idebate.json, parliamentary.json (those 5 files form the corpus), and topics.xml (the topics for which documents should be retrieved).

- An output directory (named $outputDir in TIRA). Your software should create a standard TREC run file in $outputDir/run.txt.

Your software can use the args-me.json file or the API of the search engine args.me to produce the run file.

As soon as your software is installed in your VM, you can register it in TIRA.

Assume that your software is started with a bash script in your home directory called my-software.sh which expects an argument -i specifying the input directory, and an argument -o specifying the output directory. Click on "Add software" and specify the command my-software.sh -i $inputDataset -o $outputDir. The other fields can stay with default settings.
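If my-software.sh delegates to a Python program, the two arguments could be handled roughly as sketched below. This is only an illustration under assumptions: the function names are made up, and the retrieval step is left as a placeholder that writes an empty run file.

```python
import argparse
from pathlib import Path


def parse_args(argv=None):
    """Parse the -i (input directory) and -o (output directory) arguments."""
    parser = argparse.ArgumentParser(description="Produce a TREC run file.")
    parser.add_argument("-i", dest="input_dir", type=Path, required=True,
                        help="directory with the corpus files and topics.xml")
    parser.add_argument("-o", dest="output_dir", type=Path, required=True,
                        help="directory in which run.txt is created")
    return parser.parse_args(argv)


def main(argv=None):
    args = parse_args(argv)
    topics_file = args.input_dir / "topics.xml"
    run_file = args.output_dir / "run.txt"
    args.output_dir.mkdir(parents=True, exist_ok=True)

    # Placeholder: a real system would read topics_file, rank the corpus
    # documents for each topic, and collect one run line per retrieved document.
    run_lines = []

    run_file.write_text("".join(line + "\n" for line in run_lines),
                        encoding="utf-8")
    return topics_file, run_file


# In a standalone script, call main() under the usual
# `if __name__ == "__main__":` guard.
```

With such a script saved as, say, my_software.py (a hypothetical name), my-software.sh would only need to forward its -i and -o arguments to it.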

Click on "Run" to execute your software in TIRA. Note that your VM will not be accessible while your system is running – it will be “sandboxed”, detached from the internet, and after the run the state of the VM before the run will be restored. Your run will be reviewed and evaluated by the organizers.

NOTE: By submitting your software you retain full copyrights. You agree to grant us usage rights for evaluation of the corresponding data generated by your software. We agree not to share your software with a third party or use it for any purpose other than research.

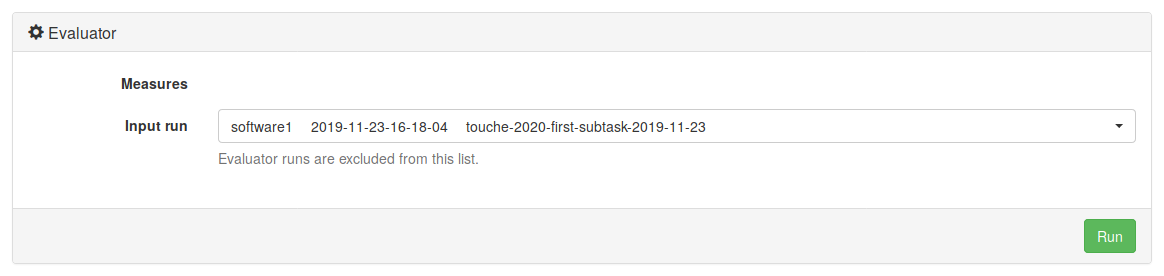

Once the run of your system completes, please also run the evaluator on the output of your system to verify that your output is a valid submission. These are two separate actions and both should be invoked through the web interface of TIRA. You don’t have to install the evaluator in your VM. It is already prepared in TIRA. You should see it in the web interface, under your software, labeled “Evaluator”. Before clicking the “Run” button, you will use a drop-down menu to select the “Input run”, i.e. one of the completed runs of your system. The output files from the selected run will be evaluated.

You can see and download STDOUT and STDERR as well as the outputs of your system. In the evaluator run you will see only STDOUT and STDERR, which will tell you if one or more of your output files is not valid. If you think something went wrong with your run, send us an e-mail. Additionally, we review your submissions and contact you on demand.
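Before uploading, it can help to check a run file locally for the most common format problems. The sketch below is not the TIRA evaluator and makes no claim about which checks it performs; it only tests the constraints stated on this page (six fields, Q0 in the second column, non-increasing scores per topic, at most 1,000 documents per topic). The function name `validate_run` is hypothetical.

```python
def validate_run(lines, max_docs=1000):
    """Return a list of human-readable problems found in TREC run lines."""
    problems = []
    last_score = {}  # topic number -> score of the previous line for that topic
    counts = {}      # topic number -> number of documents seen so far
    for n, line in enumerate(lines, start=1):
        parts = line.split()
        if len(parts) != 6:
            problems.append(f"line {n}: expected 6 fields, got {len(parts)}")
            continue
        qid, q0, _doc, rank, score, _tag = parts
        if q0 != "Q0":
            problems.append(f"line {n}: second field should be Q0")
        try:
            int(rank)
            score_value = float(score)
        except ValueError:
            problems.append(f"line {n}: rank or score is not numeric")
            continue
        if qid in last_score and score_value > last_score[qid]:
            problems.append(f"line {n}: score increases within topic {qid}")
        last_score[qid] = score_value
        counts[qid] = counts.get(qid, 0) + 1
        if counts[qid] == max_docs + 1:
            problems.append(f"topic {qid}: more than {max_docs} documents")
    return problems


example = [
    "1 Q0 Sf9294c83-Af186e851 1 17.89 myGroupMyMethod",
    "1 Q0 Sf9294c83-A9a4e056e 2 16.43 myGroupMyMethod",
    "1 Q0 S96f2396e-Aaf079b43 3 16.42 myGroupMyMethod",
]
print(validate_run(example))
```

An empty list means that none of these checks failed; the evaluator in TIRA remains the authoritative check.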

You can register more than one system (“software/model”) per virtual machine using the web interface. TIRA gives systems automatic names such as “Software 1”, “Software 2”, etc. You can perform several runs per system.

Results

| Team | Tag | nDCG@5 |
|---|---|---|
| Elrond | ElrondKRun | 0.720 |
| Pippin Took | seupd2021-rck-stop-kstem-doShingle-false-shingle-size-0-Dirichlet-mu-2000.0-topics-2021 | 0.705 |
| Robin Hood | robinhood_combined | 0.691 |
| Asterix | run2021_Mixed_1.625_1.0_250 | 0.681 |
| Dread Pirate Roberts | dreadpirateroberts_lambdamart_small_features | 0.678 |
| Skeletor | bm25-0.7semantic | 0.667 |
| Luke Skywalker | luke-skywalker | 0.662 |
| Shanks | re-rank2 | 0.658 |
| Heimdall | argrank_r1_c10.0_q5.0 | 0.648 |
| Athos | uh-t1-athos-lucenetfidf | 0.637 |
| Goemon Ishikawa | goemon2021-dirichlet-lucenetoken-atirestop-nostem | 0.635 |
| Jean Pierre Polnareff | seupd-jpp-dirichlet | 0.633 |
| Swordsman (Baseline) | Dirichlet | 0.626 |
| Yeagerists | run_4_chocolate-sweep-50 | 0.625 |
| Hua Mulan | args_naiveexpansion_0 | 0.620 |
| Macbeth | macbethPretrainedBaseline | 0.611 |
| Swordsman (Baseline) | ARGSME | 0.607 |
| Blade | bladeGroupBM25Method1 | 0.601 |
| Deadpool | uh-t1-deadpool | 0.557 |
| Batman | DE_RE_Analyzer_4r100 | 0.528 |
| Little Foot | whoosh | 0.521 |
| Gandalf | BM25F-gandalf | 0.486 |
| Palpatine | run | 0.401 |

| Team | Tag | nDCG@5 |
|---|---|---|
| Heimdall | argrank_r1_c10.0_q10.0 | 0.841 |
| Skeletor | manifold | 0.827 |
| Asterix | run2021_Jolly_10.0_0.0_0.3_0.0__1.5_1.0_300 | 0.818 |
| Elrond | ElrondOpenNlpRun | 0.817 |
| Pippin Took | seupd2021-rck-stop-kstem-doShingle-false-shingle-size-0-Dirichlet-mu-1800.0-expanded-topics-2021 | 0.814 |
| Goemon Ishikawa | goemon2021-dirichlet-lucenetoken-atirestop-nostem | 0.812 |
| Hua Mulan | args_gpt2expansion_0 | 0.811 |
| Yeagerists | run_4_chocolate-sweep-50 | 0.810 |
| Dread Pirate Roberts | dreadpirateroberts_lambdamart_medium_features | 0.810 |
| Robin Hood | robinhood_baseline | 0.809 |
| Luke Skywalker | luke-skywalker | 0.808 |
| Macbeth | macbethBM25CrossEncoder | 0.803 |
| Jean Pierre Polnareff | seupd-jpp-dirichlet | 0.802 |
| Athos | uh-t1-athos-lucenetfidf | 0.802 |
| Swordsman (Baseline) | DirichletLM | 0.796 |
| Shanks | LMDSimilarity | 0.795 |
| Blade | bladeGroupLMDirichlet | 0.763 |
| Little Foot | whoosh | 0.718 |
| Swordsman (Baseline) | ARGSME | 0.717 |
| Batman | DE_RE_Analyzer_4r100 | 0.695 |
| Deadpool | uh-t1-deadpool | 0.679 |
| Gandalf | BM25F-gandalf | 0.603 |
| Palpatine | run | 0.562 |