Argument Retrieval for Controversial Questions 2022

Synopsis

- Task: Given a query about a controversial topic, retrieve and rank a relevant pair of sentences from a collection of arguments.

- Input: [data]

- Submission: [tira]

- Evaluation: [topics]

- Manual judgments (top-5 pooling): [qrels: relevance, quality, coherence]

Task

The goal of Task 1 is to support users who search for arguments to be used in conversations (e.g., getting an overview of pros and cons or just looking for arguments in line with a user's stance). However, in contrast to previous iterations of this task, we now require retrieving a pair of sentences from a collection of arguments. Each sentence in this pair must be argumentative (e.g., a claim, a supporting premise, or a conclusion). Also, to encourage diversity of the retrieved results, sentences in this pair may come from two different arguments.

Data

Example topic for Task 1:

<topic>

<number>1</number>

<title>Is human activity primarily responsible for global climate change?</title>

</topic>

The corpus for Task 1 is a pre-processed version of the args.me corpus (version 2020-04-01) where each argument is split into sentences; you may index these sentences (and the complete arguments if you wish to) with your favorite retrieval system. To ease participation, you may also directly use the args.me search engine's API for a baseline retrieval and then extract the candidate pair of sentences. Duplicate sentences are an expected part of this dataset. This is because in some of the debate portals from which the args.me corpus was derived, individual arguments are mapped to the same conclusion (which is essentially the discussion topic/title on the corresponding portal). Moreover, we opted to preserve the duplicates to reflect the common information retrieval scenario where filtering out such documents is part of the pipeline. [download]
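A topic in the format shown above can be read with Python's standard XML parser. The following is a minimal sketch, not an official loader: it assumes that the topics file wraps all `<topic>` elements in a single root element (called `<topics>` here, which is an assumption), and the function name `parse_topics` is made up for illustration.

```python
import xml.etree.ElementTree as ET


def parse_topics(xml_text):
    """Return a list of (number, title) tuples from a topics XML string."""
    root = ET.fromstring(xml_text)
    topics = []
    # iter("topic") visits every <topic> element, including the root itself
    # if the file happens to contain a single <topic> without a wrapper.
    for topic in root.iter("topic"):
        number = int(topic.findtext("number").strip())
        title = topic.findtext("title").strip()
        topics.append((number, title))
    return topics


# The <topics> wrapper is an assumption; the inner element is the example topic.
SAMPLE = """<topics>
<topic>
<number>1</number>
<title>Is human activity primarily responsible for global climate change?</title>
</topic>
</topics>"""

for number, title in parse_topics(SAMPLE):
    print(number, title)
```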

Evaluation

Be sure to retrieve a pair of "strong" argumentative sentences. Our human assessors will label the retrieved pairs manually, both for their general topical relevance and for their argument quality, i.e., (1) whether each sentence in the retrieved pair is argumentative (claim, supporting premise, or conclusion), (2) whether the sentence pair forms a coherent text (sentences in a pair must not contradict each other), and (3) whether the sentence pair forms a short summary of the arguments from which the sentences come; ideally, each sentence in the pair is the most representative or most important sentence of its argument.

The format of the relevance/quality judgment file:

qid 0 pair rel

- qid: The topic number.

- 0: Unused, always 0.

- pair: The pair of sentence IDs (from the provided version of the args.me corpus).

- rel: The relevance judgment: -2 non-argument (spam), 0 (not relevant) to 3 (highly relevant). The quality judgment: 0 (low) to 3 (high).
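A judgment file in this four-column format can be loaded into a nested dictionary. This is a minimal sketch that assumes whitespace-separated columns and a pair field without spaces (the two sentence IDs joined by a comma, as in the run format). The function name `parse_qrels` is hypothetical, and the labels in the sample lines are invented for illustration only.

```python
from collections import defaultdict


def parse_qrels(lines):
    """Map topic number -> {pair of sentence IDs -> judgment label}."""
    qrels = defaultdict(dict)
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        qid, _unused, pair, rel = line.split()
        qrels[qid][pair] = int(rel)  # int() also handles the -2 label
    return qrels


# Invented labels; these are not actual judgments.
sample = [
    "1 0 S71152e5e-A66163a57__CONC__1,S71152e5e-A66163a57__PREMISE__2 3",
    "1 0 S72f5af83-Afb975dba__PREMISE__1,S72f5af83-Afb975dba__CONC__1 -2",
]
qrels = parse_qrels(sample)
print(len(qrels["1"]))
```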

Submission

We encourage participants to use TIRA for their submissions to allow for better reproducibility. Please also have a look at our TIRA quickstart; in case of problems, we will be able to assist you. Although TIRA is the preferred way of submitting runs, you may also submit runs via email if problems arise. We will try to quickly review your TIRA or email submissions and provide feedback.

Runs may be either automatic or manual. An automatic run must not "manipulate" the topic titles via manual intervention. A manual run is anything that is not an automatic run. Upon submission, please let us know which of your runs are manual. For each topic, include a minimum of 100 and up to 1000 retrieved sentence pairs. Each team can submit up to 5 different runs.

The submission format for the task will follow the standard TREC format:

qid stance pair rank score tag

With:

- qid: The topic number.

- stance: The stance of the sentence pair ("PRO" or "CON").

- pair: The pair of sentence IDs (from the provided version of the args.me corpus) returned by your system for the topic qid.

- rank: The rank the document is retrieved at.

- score: The score (integer or floating point) that generated the ranking. The score must be in descending (non-increasing) order: it is important to handle tied scores.

- tag: A tag that identifies your group and the method you used to produce the run.

An example run for Task 1 is:

1 PRO S71152e5e-A66163a57__CONC__1,S71152e5e-A66163a57__PREMISE__2 1 17.89 myGroupMyMethod

1 PRO S6c286161-Aafd7e261__CONC__1,S6c286161-Aafd7e261__PREMISE__1 2 16.43 myGroupMyMethod

1 CON S72f5af83-Afb975dba__PREMISE__1,S72f5af83-Afb975dba__CONC__1 3 16.42 myGroupMyMethod

...

If your run does not include stance information, use Q0 as the value in the stance column.
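Run lines in the six-column format above can be generated from scored sentence pairs as follows. This is a sketch under stated assumptions: the function name `format_run` and its parameters are made up for illustration, ties are resolved by keeping the input order (one possible way to handle tied scores), and the list is cut at 1000 pairs per topic.

```python
def format_run(qid, scored_pairs, tag, max_pairs=1000):
    """Build run lines 'qid stance pair rank score tag' for one topic.

    scored_pairs: iterable of (stance, sentence_id_1, sentence_id_2, score).
    """
    # Sort by score in descending order. Python's sort is stable, also with
    # reverse=True, so pairs with tied scores keep their input order.
    ranked = sorted(scored_pairs, key=lambda p: p[3], reverse=True)[:max_pairs]
    lines = []
    for rank, (stance, first, second, score) in enumerate(ranked, start=1):
        lines.append(f"{qid} {stance} {first},{second} {rank} {score} {tag}")
    return lines


pairs = [
    ("CON", "S72f5af83-Afb975dba__PREMISE__1", "S72f5af83-Afb975dba__CONC__1", 16.42),
    ("PRO", "S71152e5e-A66163a57__CONC__1", "S71152e5e-A66163a57__PREMISE__2", 17.89),
]
for line in format_run(1, pairs, "myGroupMyMethod"):
    print(line)
```

Assigning ranks only after sorting keeps the score column non-increasing by construction.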

Some examples do not contain the conclusion as part of the sentences column although it is present in the conclusion column. This is because we excluded very short conclusions (fewer than two words) from being valid argument sentences. We observed that in many cases they did not convey any stance (e.g., "home schooling"). Since we cannot inspect every short conclusion manually, we applied the length heuristic to filter them. However, if you still want to retrieve such conclusions as part of the final output, please provide [doc_id]__CONC__1 as the corresponding sentence ID (e.g., S72f5af83-Afb975dba__CONC__1).
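Judging from the example IDs, a sentence ID appears to consist of the argument ID, the sentence type (CONC or PREMISE), and an index, joined by double underscores. The sketch below relies on that observed pattern, which is an assumption rather than a documented specification. All function names are hypothetical.

```python
def split_sentence_id(sentence_id):
    """Split 'ARG__TYPE__N' into (argument ID, sentence type, index)."""
    arg_id, kind, index = sentence_id.split("__")
    return arg_id, kind, int(index)


def conclusion_id(doc_id):
    """Sentence ID for a conclusion, including filtered short ones."""
    return f"{doc_id}__CONC__1"


def same_argument(pair):
    """True if both sentences of a comma-separated pair share one argument."""
    first, second = pair.split(",")
    return split_sentence_id(first)[0] == split_sentence_id(second)[0]


print(split_sentence_id("S72f5af83-Afb975dba__CONC__1"))
print(conclusion_id("S72f5af83-Afb975dba"))
```

A check like `same_argument` can help when a system should mix sentences from two different arguments.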

TIRA Quickstart

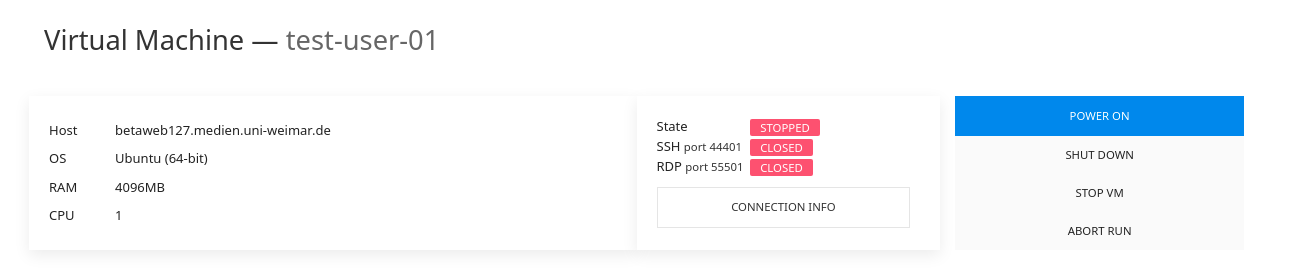

Participant software is run in a virtual machine. Log in to TIRA, go to the task's dataset page, and click on ">_ SUBMIT". Click the "CONNECTION INFO" button for how to connect to the virtual machine. Click on "POWER ON" if the state is not "RUNNING".

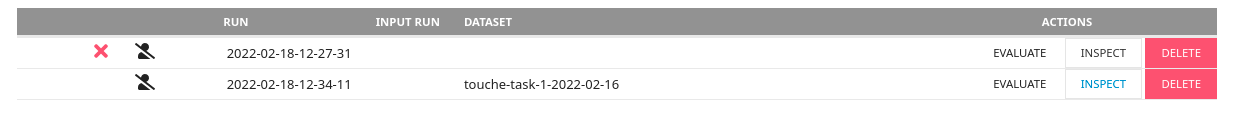

The software is executed on the command line with two parameters: (1) $inputDataset refers to a directory that contains the collection; (2) $outputDir refers to a directory in which the software has to create the submission file named run.txt. Specify exactly how each piece of software on your virtual machine is run using the "Command" field in the TIRA web interface. Select touche-task-1-2022-02-16 as the input dataset.
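An entry point matching this two-parameter convention could look like the following sketch. The script layout and the `retrieve` function are hypothetical placeholders for your own approach; only the two directory parameters and the output file name run.txt come from the description above.

```python
import sys
from pathlib import Path


def retrieve(input_dir):
    """Hypothetical placeholder: return the run lines produced by your system."""
    return []


def main(input_dir, output_dir):
    """Read the collection from input_dir and write output_dir/run.txt."""
    out = Path(output_dir)
    out.mkdir(parents=True, exist_ok=True)
    run_lines = retrieve(Path(input_dir))
    text = "".join(line + "\n" for line in run_lines)
    (out / "run.txt").write_text(text, encoding="utf-8")


# Only run when called with exactly the two expected arguments.
if __name__ == "__main__" and len(sys.argv) == 3:
    main(sys.argv[1], sys.argv[2])
```

With such a script stored at an illustrative location like /home/user/run.py, the "Command" field would contain something along the lines of `python3 /home/user/run.py $inputDataset $outputDir`.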

Once you "RUN" the software, you will not be able to connect to the virtual machine while it is running (this takes at least 10 minutes). Once finished, click on "INSPECT" to check on the run and click on "EVALUATE" for a syntax check (give it a few minutes, then check back on the page). Your run will later be reviewed and evaluated by the organizers. If you are unsure about something, ask in the forum or send a mail/message to Shahbaz.

Create a separate "Software" entry in the TIRA web interface for each of your approaches. NOTE: By submitting your software you retain full copyrights. You agree to grant us usage rights for evaluation of the corresponding data generated by your software. We agree not to share your software with a third party or use it for any purpose other than research.

Results

| Team | Tag | Mean NDCG@5 | CI95 Low | CI95 High |
|---|---|---|---|---|
| Porthos | scl_dlm_bqnc_acl_nsp | 0.742 | 0.670 | 0.807 |
| Daario Naharis | INTSEG-Letter-no_stoplist-Krovetz-Icoef-Evidence-Par | 0.683 | 0.609 | 0.755 |
| Bruce Banner | Bruce-Banner_pyserinin_sparse_v3 | 0.651 | 0.573 | 0.720 |
| D Artagnan | seupd2122-6musk-kstem-stop-shingle3 | 0.642 | 0.575 | 0.705 |
| Gamora | seupd2122-javacafe-gamoraHeuristicsOnlyQueryReductionDoubleIndex | 0.616 | 0.551 | 0.687 |
| Hit Girl | Io | 0.588 | 0.515 | 0.657 |
| Pearl | PearlBlocklist_WeightedRelevance | 0.481 | 0.399 | 0.560 |
| Gorgon | GorgonA2Bm25 | 0.408 | 0.354 | 0.461 |
| General Grievous | seupd2122-lgtm_QE_NRR | 0.403 | 0.335 | 0.471 |
| Swordsman | baseline_swordsman | 0.356 | 0.296 | 0.412 |
| Korg | korg9000 | 0.252 | 0.187 | 0.318 |

| Team | Tag | Mean NDCG@5 | CI95 Low | CI95 High |
|---|---|---|---|---|
| Daario Naharis | INTSEG-Letter-no_stoplist-Krovetz-Icoef-Evidence-Par | 0.913 | 0.870 | 0.947 |
| Porthos | scl_dlm_bqnc_acl_nsp | 0.873 | 0.825 | 0.913 |
| Gamora | seupd2122-javacafe-gamoraHeuristicsOnlyQueryReductionDoubleIndex | 0.785 | 0.729 | 0.848 |
| Hit Girl | Ganymede | 0.776 | 0.707 | 0.840 |
| Bruce Banner | Bruce-Banner_pyserinin_sparse_v1 | 0.772 | 0.702 | 0.830 |
| Gorgon | GorgonA2Bm25 | 0.742 | 0.700 | 0.786 |
| D Artagnan | seupd2122-6musk-stop-wordnet-kstem-dirichlet | 0.733 | 0.676 | 0.787 |
| Pearl | PearlArgRank7530 | 0.678 | 0.609 | 0.744 |
| Swordsman | baseline_swordsman | 0.608 | 0.543 | 0.671 |
| General Grievous | seupd2122-lgtm_NQE_NRR | 0.517 | 0.442 | 0.591 |
| Korg | korg9000 | 0.453 | 0.384 | 0.529 |

| Team | Tag | Mean NDCG@5 | CI95 Low | CI95 High |
|---|---|---|---|---|
| Daario Naharis | INTSEG-Run-Whitespace-Krovetz-Stoplist-Pos-Evidence-icoeff-Sep | 0.458 | 0.389 | 0.525 |
| Porthos | scl_dlm_bqnc_acl_nsp | 0.429 | 0.353 | 0.509 |
| Pearl | PearlArgRank7530 | 0.398 | 0.311 | 0.485 |
| Bruce Banner | Bruce-Banner_pyserinin_sparse_v1 | 0.378 | 0.300 | 0.459 |
| D Artagnan | seupd2122-6musk-kstem-stop-shingle3 | 0.378 | 0.311 | 0.452 |
| Hit Girl | Ganymede | 0.377 | 0.303 | 0.456 |
| Gamora | seupd2122-javacafe-gamora_sbert_kstemstopengpos_multi_YYY | 0.285 | 0.203 | 0.373 |
| Gorgon | GorgonKEBM25 | 0.282 | 0.233 | 0.335 |
| Swordsman | baseline_swordsman | 0.248 | 0.193 | 0.303 |
| General Grievous | seupd2122-lgtm_QE_NRR | 0.231 | 0.162 | 0.313 |
| Korg | korg9000 | 0.168 | 0.117 | 0.223 |
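For orientation, the Mean NDCG@5 column reported above can be approximated with a few lines of Python. This is only a sketch and not the organizers' evaluation script: it assumes linear gains with a log2 rank discount, treats unjudged pairs and the -2 (spam) label as gain 0, and averages over all judged topics. These choices and all function names are assumptions.

```python
import math


def dcg(gains):
    """Discounted cumulative gain with a log2 rank discount."""
    return sum(gain / math.log2(rank + 2) for rank, gain in enumerate(gains))


def ndcg_at_k(ranked_pairs, judgments, k=5):
    """nDCG@k for one topic; judgments maps pair -> label."""
    # Assumption: unjudged pairs and negative labels contribute no gain.
    gains = [max(judgments.get(pair, 0), 0) for pair in ranked_pairs[:k]]
    ideal = sorted((max(label, 0) for label in judgments.values()), reverse=True)[:k]
    ideal_dcg = dcg(ideal)
    return dcg(gains) / ideal_dcg if ideal_dcg > 0 else 0.0


def mean_ndcg(run, qrels, k=5):
    """Average nDCG@k over all topics that have judgments."""
    scores = [ndcg_at_k(run.get(qid, []), judged, k) for qid, judged in qrels.items()]
    return sum(scores) / len(scores) if scores else 0.0


# Toy data with invented labels, for illustration only.
toy_qrels = {"1": {"a,b": 3, "c,d": 2, "e,f": -2}}
toy_run = {"1": ["a,b", "c,d", "e,f"]}
print(mean_ndcg(toy_run, toy_qrels))
```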